September 20, 2009

How my netbook taught me to love xmonad

I've had this low-level urge to try a new window manager for a few months now. I work on a Linux box (Ubuntu) daily and mostly run a number of terminals, GNU Emacs, Mozilla Firefox, and Google Chrome. Nothing too fancy, really. Oh, and a shitty VPN client.

Background

Most of the time I'm doing this in front of a 24" (or larger) monitor running at 1920x1200, so there's a lot of screen real estate. Yet I was always annoyed by how much time I spent moving windows around or trying to find the optimal layout--always reaching for the mouse.

Years ago when 1024x768 was the norm, I ran a heavily customized fvwm2 and enjoyed it. But then I made the move to Windows for a few years and came back to Linux with Ubuntu/Gnome as my "desktop."

The Netbook

Months ago I wrote about how I love my Samsung NC10. When I'm not at my desk, I'll often use PuTTY to log in, resume my screen session(s), and continue working. For what it's worth, I find that the free DejaVu Fonts (specifically the Monospaced one) work exceptionally well.

What I realized is that my method of having one terminal in full-screen mode on each "desktop" (thanks to the VirtualWin virtual desktop manager) is surprisingly productive, even on the little 10" screen. At first I considered this a fluke and attributed it mainly to the novelty of working this way. But after a while I realized that it was the focus that this setup enforces. There simply isn't enough room to have a browser on screen to distract me while I'm coding something, reading email, etc.

I really need to focus on one or a few tightly related tasks. The cognitive overload of having the whole Internet available really gets pushed off-screen and mostly out of mind.

Trying xmonad

After a discussion in our chatroom at work the other day, I finally decided to give a new window manager a try: xmonad. A big help was Tom's Introduction to the xmonad Tiling Window Manager which gave me just the information I needed to get started.

I used it most of Friday and a bit off and on Saturday, both on my primary work computer and my "home" Linux desktop machine. The experience has been surprisingly positive so far. Most of the hassles have revolved around re-training my hands to learn some new keyboard shortcuts and finding replacements for the few GUI things that Gnome provided on my previous desktop.

One thing I particularly like is that most of the keybindings seem very sane out of the box with xmonad. I haven't really needed to customize anything yet. I have found that a couple of keystrokes that I use in GNU Emacs appear to be intercepted by xmonad and I haven't found an easy way to undo that or at least discover what they're supposed to do: Alt-w and Alt-q are the two I've noticed.

I also needed to resurrect an old xmodmap file that I could use to turn my CAPS LOCK into a Control key and re-discover the right xset command to set my key repeat rate higher than the default: xset r rate 250 30.
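For anyone wanting to do the same, here's a rough sketch of how those two tweaks could be scripted. The three xmodmap lines are the commonly used Caps-Lock-to-Control recipe, not necessarily what an old xmodmap file would contain, and the function names and the ~/.Xmodmap default path are assumptions made up for illustration.

```shell
#!/bin/sh
# Sketch only: write a small xmodmap file that turns Caps Lock into Control.
# write_xmodmap and the default path are illustrative names, not from the post.
write_xmodmap() {
    target=${1:-"$HOME/.Xmodmap"}
    cat > "$target" <<'EOF'
remove Lock = Caps_Lock
keysym Caps_Lock = Control_L
add Control = Control_L
EOF
}

# Apply the key map and the repeat rate, but only inside an X session.
apply_keyboard_tweaks() {
    target=${1:-"$HOME/.Xmodmap"}
    if [ -z "${DISPLAY:-}" ]; then
        echo "no X display; skipping" >&2
        return 1
    fi
    xmodmap "$target"
    # 250 ms before repeating starts, then 30 repeats per second
    xset r rate 250 30
}
```

Calling write_xmodmap and then apply_keyboard_tweaks from whatever starts the X session should be enough; keeping the two steps separate makes it easy to inspect the file before loading it.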

Other than those few nits, it's been pretty smooth sailing. I definitely feel like I'll be more productive in the long run as a result of switching.

Have you tried a tiling window manager? Did you stick with it?

August 03, 2009

Google Chrome is the New Firefox, and Firefox the new IE

I spent too long on Friday screwing around with stuff on my work laptop in an effort to make Firefox's apparent performance not SUCK ASS. Ever since I upgraded to Ubuntu 9.04 I've been somewhat unhappy, mostly as a result of the well publicized issues with Intel Video on Ubuntu 9.04.

I read about possible hope with upgrading the driver which also required a kernel upgrade, so I did both and rebooted. And, as I hoped, video seemed a bit snappier.

But Firefox still SUCKED ASS.

At this point I was REALLY PISSED. Sure my new video was nice and all but making new tabs (or switching between them) was still slow, and the disaster known as the "awesome bar" (how to disable) still sucked.

So on a whim I went and installed Google Chrome. It totally rocks on my Samsung NC10 netbook (running WinXP), so I figured why not give it a try.

It turns out that Chrome on Linux is DRAMATICALLY FASTER THAN FIREFOX!

It's been quite stable on Windows, so I'm hoping the same is true on Linux and I can just switch over to it. As of now, Firefox is my primary browser on only half my computers. Chrome seems to be slowly displacing it, just like Firefox replaced the bloated pig known as Mozilla years ago (and the long since stagnant IE on Windows).

It's funny. Browsers seem to be like Internet companies. Every few years a new, small, faster one comes along to kill off some (or all) of the previous generation. I guess this is just the latest in that constant evolution.

It'll be interesting to see how this new competition really affects Mozilla Firefox.

I spend most of my day in gnome-terminal (to screen, mutt, irssi, etc.), GNU Emacs, and a browser. When they're not fast and stable, my life sucks.

June 16, 2009

My Drizzle Article in Linux Magazine (XtraDB and Sphinx too!)

After a few years off, I've been doing some writing for Linux Magazine (which is on-line only) again recently. First off, my just published feature article is Drizzle: Rethinking the MySQL Database Kernel. As you might have guessed, it looks at Drizzle and some of the reasoning behind forking and re-working MySQL.

I'm also writing a weekly column that we've been calling "Bottom of the Stack" (RSS) which started a few weeks ago. Recent articles are:

The basic idea is that I'll be writing about back-end data processing and systems--the sort of stuff that lives in the bottom half of the traditional LAMP stack.

If you have ideas of stuff you'd like to cover, please drop me a line.

As a side note, I wrote my first article for Linux Magazine back in June of 2001: MySQL Performance Tuning. Those were the MySQL 3.23 days. How time flies!

An amazing credit to some of the folks involved with Linux Magazine, all of my past writings are available there.

August 07, 2006

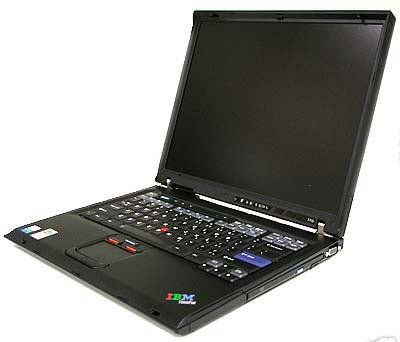

Ubuntu Linux now on my new Thinkpad T43p: Wow!

After reading report after report of people using Ubuntu Linux on various flavors of desktop and laptop computers, I've finally decided to give it a try.

Last weekend a Thinkpad T43p (80GB disk, 2GB RAM, 1600x1200, etc) arrived on my doorstep and patiently waited for me to finish studying for my FAA written test (passed it this morning, thank you very much).

Now, it's worth noting a few things.

- I'm not new to Linux on laptops. This is the 4th upon which I've attempted to run Linux. Over the last six years, I've probably spent 1.5 to 2 of them with Linux on a laptop as my primary computer.

- I'm not new to Thinkpads. The T43p that I now own (thanks to eBay) was preceded by the following, in reverse chronological order: T23, T21, 600E, and a 380D.

- In recent years I've become quite impatient with software. If it doesn't actually make my life better, I ditch it much faster.

- I had high expectations of Ubuntu, thanks to all the hype.

Given all that, I'm shocked and amazed. It works. It just works.

I booted from the Ubuntu 6.06 "live" CD and ran the installer. I then rebooted the notebook and found that it detected my wireless interface just fine. The screen was properly detected at 1600x1200, the sound worked, and the TrackPad worked fine.

Then came the real test. I decided to exercise the power management features. Suspend to disk (hibernate) and suspend to RAM (suspend) worked. In both cases, it worked as well as in Windows (better in some ways) and nearly as good as a Powerbook.

I cannot overstate how important this is: Ubuntu is the first real "desktop" Linux I've ever seen. There's a lot of polish to it, most of the "right" things have been hidden from non-Linux geeks, and it just works.

I've read so many other stories like this but had to see it for myself.

If you've been waiting years and years for desktop Linux (or laptop Linux) to finally arrive, give Ubuntu a shot. Seriously.

It's good to have a Linux laptop again. It's even better not to have to fight it. It's the freedom and power of a Debian-based Linux without all the hassle. :-)

August 01, 2006

Sun Should Use apt

It's funny, when I read Tim Bray saying this:

Apt-get is just so unreasonably fucking great. Why aren't we using it for Solaris updates?

My first reaction was "I've been wondering that for YEARS!" Of course, I wondered the same thing about Red Hat for nearly as long.

It's funny how open source solutions often outdo the commercial guys--even the ones who "get" open source.

Maybe Sun should offer a Debian style package repository for Solaris? I know there are some unofficial attempts at this, but I'm talking about a full-blown supported setup.

November 27, 2005

Resizable Textarea Extension for Firefox 1.5

The Resizable Textarea extension for Firefox has been incredibly useful for blogs and on-line forms (as well as forums). But ever since upgrading to Firefox 1.5, I've been sad. Version 0.1a of the extension wasn't packaged to work in Firefox 1.5.

Today I got sick of that and decided to fix it. For some reason, I'm not allowed to post a reply on the original site. So here I present to you the Resizable Textarea Extension 0.1b. This differs from the original version only in the metadata provided to Firefox. I had to increase the allowable maximum Firefox version number--that's it.

Install at your own risk, of course.

Also, if you still haven't tried Firefox (especially version 1.5), what are you waiting for? :-)

Update: Please see this post if you're using Firefox 2.0 or newer.

November 04, 2005

Extend Firefox, Win Prizes

If you haven't yet seen the news, there's a contest going on called Extend Firefox:

Calling all Firefox enthusiasts: it's time to submit your work for the chance to win great prizes and earn bragging rights as one of the premier Extension developers for Firefox! We're looking for new and upgraded Firefox Extensions that focus on enhancing the Firefox experience, especially those that take advantage of new features in Firefox 1.5.

Jesse Ruderman has a quick overview of new Firefox 1.5 features that you're encouraged to take advantage of. The computer you could win looks pretty nice.

Need help or ideas? Try the new IRC channel. Or look at the list that Jesse published. (Bonus points if you make his Google idea work with Yahoo too...)

Disclaimer: I'm one of the judges. I do not take bribes. Well, not often. ;-)

August 06, 2005

Making Firefox Handle Multiline Pasted ("Broken") URLs

Andrei provides an excellent tip for Firefox users in Pasting Wrapped URLs:

Here's another Mozilla/Firefox tip: if you copy a URL wrapped over multiple lines from somewhere and try to paste it into the address bar, you will end up only with the first line of it. To fix it, go to about:config and change editor.singleLine.pasteNewlines setting to 3 or add:

    user_pref("editor.singleLine.pasteNewlines", 3);

to your user.js file. Now all the line breaks will be removed upon pasting.

Excellent! I'd been looking for such a feature.

June 10, 2005

jwz switches from Linux to Mac (for non-servers)

This post amuses the heck out of me.

Remember last week, when I tried to buy exactly the same audio card that 99.99% of the world owns and convince Linux to be able to play two sounds at once? Yeah, turns out, that was the last straw. I bought an iMac, and now I play my music with iTunes.

There really is something to be said for using an OS that consumer hardware vendors actually give a shit about....

I plugged a mouse with three buttons and a wheel into the Mac, and it just worked without me having to read the man page on xorg.conf or anything. Oh frabjous day.

And the fact that he ends with this is just classic:

Dear Slashdot: please don't post about this. Screw you guys.

Classic, I tell you.

May 02, 2005

Firefox Market Share on O'Reilly Sites

Over on O'Reilly Radar, Tim writes about the browser stats they're seeing across the O'Reilly Network and summarizes by saying:

In short, during the past year, Firefox has basically wiped out the Netscape browser, and has taken 20 points of share from IE. Safari browser has grown fractionally, but given the rise in Apple's market share, these numbers would suggest that a good percentage of Apple users are switching to Firefox as well. Meanwhile, Janco reports that Firefox share in the broader market has surged past 10%.

The interesting thing to me is how vastly the numbers differ between tech oriented sites like those O'Reilly hosts, and the more mainstream sites we run at Yahoo.

Firefox is definitely on the rise in the Yahoo logs, but it's not even close to crossing the 50% mark yet. And from what I hear via friends at other large sites (AOL, Microsoft, etc) they're in the same boat we are.

It'll be fun to watch this trend continue. How long does it take for "the rest of the world" to catch up? Will it ever? (I suspect not.) Will we reach a point of equilibrium, maybe? Who knows.

Either way, Firefox recently hit 50,000,000 downloads--quite an impressive accomplishment!

February 14, 2005

RAM Upgrades Are A Wonderful Thing

The laptop that I was bitch-slapped for using had one problem: it came with only 512MB of memory. In my mind that's laughable. This is 2005!

I can't imagine using a modern computer with less than 1GB of RAM. When you factor in Thunderbird, Firefox, Emacs, two IM clients, Office Apps, PuTTY, the Gimp, and the various other stuff I run daily, it's not difficult to see why.

Windows likes memory.

Come to think of it, what doesn't? My Powerbook has 1GB. My Linux box has 1GB. My Windows desktop has 2GB. Most of my co-located servers have more than 1GB.

So I got an upgrade this morning to 1GB and, as expected, it has made a world of difference. No more swapping mid-way through the day. As the tech guy was doing the swap, I commented on how most of the time people who want a "faster" computer simply need more memory. He confirmed that a RAM upgrade often does the trick.

Consider this my public service announcement for the day.

February 12, 2005

Remote Linux Kernel Upgrade Success

For the first time in history, I performed a remote kernel upgrade on the server that was previously cracked (goodbye local root exploit) and it actually worked when I rebooted the box in the middle of the night.

As a devoted Debian user, here's what I did...

Get New Kernel Source, Unpack It, Make Symlink

    # apt-get install kernel-source-2.4.27
    # cd /usr/src
    # bunzip2 -c kernel-source-2.4.27.tar.bz2 | tar -xvf -
    # rm linux
    # ln -s kernel-source-2.4.27 linux

Copy Old Kernel Config, Update It

    # cd linux
    # cp ../kernel-source-2.4.23/.config .
    # make oldconfig    (answer No to everything)

Compile Kernel, Build Debian Package, Install

This particular machine uses a non-initrd kernel, so I do this:

    # make-kpkg clean
    # make-kpkg kernel_image

Otherwise I'd do this to get an initrd-based kernel package:

    # make-kpkg clean
    # make-kpkg --initrd kernel_image

In either case, installation of the new kernel is trivial, thanks to Debian's kernel-package:

    # cd ..
    # dpkg -i kernel-image-VERSION_i386.deb

That populates everything needed in the /boot directory and moves the necessary symlink to make the current kernel image the backup (old) kernel image. It then will offer to make a boot floppy and run lilo.

Cross Fingers, Reboot

After I double checked that everything looked right in the / and /boot directories and that /etc/lilo.conf was reasonable, I asked for a reboot and crossed my fingers.
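That "double check" can be partly automated. What follows is only a sketch: check_boot_link is a hypothetical helper, and the /vmlinuz and /vmlinuz.old paths in the usage note are assumptions about where the kernel symlinks live on a lilo-booted Debian box.

```shell
#!/bin/sh
# Sketch only: confirm that a boot symlink points at a real, non-empty file.
# check_boot_link is a hypothetical helper; the paths in the usage note are assumptions.
check_boot_link() {
    link=$1
    if [ ! -L "$link" ]; then
        echo "MISSING: $link is not a symlink"
        return 1
    fi
    # -s follows the link, so this fails for dangling links and empty files
    if [ ! -s "$link" ]; then
        echo "BROKEN: $link -> $(readlink "$link")"
        return 1
    fi
    echo "OK: $link -> $(readlink "$link")"
}
```

Something like `check_boot_link /vmlinuz && check_boot_link /vmlinuz.old && grep -n 'image=' /etc/lilo.conf` gives a quick look at both links and the lilo entries before committing to a remote reboot.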

# reboot

And left a command running in another window:

$ ping family2.zawodny.com

When it started returning pings again, I knew things were in decent shape. Twenty seconds later I was able to ssh in and verify that things were all happy:
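Watching ping by hand works, but the same wait can be scripted. This is a minimal sketch, not what I actually ran: wait_for is a made-up helper that retries any command, and the attempt count and delay in the usage note are arbitrary.

```shell
#!/bin/sh
# Sketch only: retry a probe command until it succeeds or attempts run out.
# Usage: wait_for ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
wait_for() {
    attempts=$1
    delay=$2
    shift 2
    i=0
    while [ "$i" -lt "$attempts" ]; do
        # Run the probe quietly; success ends the wait immediately.
        if "$@" >/dev/null 2>&1; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}
```

For example, `wait_for 60 5 ping -c 1 family2.zawodny.com && ssh family2.zawodny.com uname -a` would probe every five seconds for up to five minutes, then check the running kernel once the box answers.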

    jzawodn@family2:~$ uname -a
    Linux family2 2.4.27 #1 SMP Sat Feb 12 01:03:32 PST 2005 i686 GNU/Linux

Yeay!

Now I can start moving everything back off the 3.5 year old backup machine onto this much faster box.

February 07, 2005

Firefox: Bigger Than AOL?

Firefox is the energizer bunny of web browsers. It just keeps going and going and going.

Asa noticed this and began to wonder if Firefox has surpassed AOL's 22.2 million users.

We don't have solid usage stats and I've been mostly going on the numbers I see from OneStat, TheCounter, and WebSideStory. The only problem there is that while they all generally agree that Gecko is somewhere between 7% and 8% of the market, neither say how large that market is. I suspect that they're assuming a global share of between 500 million and 800 million users (though this is nothing more than a guess.)

If that's anywhere close to reality, then there should be between 35 million and 60 million Gecko users. Assuming that a bit more than half of those are Firefox users (and the rest are legacy Seamonkey users or Netscape users) then there's a very real chance that Firefox usage is very close to or already past the size of AOL's user base.
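The arithmetic behind that estimate is easy to replay. Here's a small sketch using only the figures quoted above (7% to 8% share, 500 to 800 million users); the estimate function is just an illustrative name, and everything is in whole millions.

```shell
#!/bin/sh
# Sketch only: reproduce the back-of-envelope estimate quoted above.
# All figures are in millions of users; integer math is enough here.
estimate() {
    market=$1      # assumed total market size, in millions
    share_pct=$2   # Gecko share, in percent
    echo $(( market * share_pct / 100 ))
}

low=$(estimate 500 7)    # smallest market, smallest share
high=$(estimate 800 8)   # largest market, largest share
echo "Gecko users: between $low and $high million"
echo "Half of that: between $(( low / 2 )) and $(( high / 2 )) million (AOL: 22.2 million)"
```

The high end lands a little above the 60 million in the quote, and AOL's 22.2 million sits inside the "half of that" range, which is why the comparison is too close to call without better numbers.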

The adoption rate of Firefox is nothing short of amazing. The IE team at Microsoft has to be busting their butts on the next version of IE.

Expect to see lots more good Firefox stuff coming.

Let's just hope this gets patched in a hurry.

January 31, 2005

Tivo's HME SDK

Tivo has released a Software Development Kit (SDK) for their broad-band connected Tivo units:

HME is the code name for TiVo’s powerful new open platform for applications that are displayed and controlled by broadband-connected TiVo Series2 DVRs. HME applications are written using the Java programming language and can run on home PC’s or remote servers hosted by TiVo. At this time, HME applications can not control any of the TiVo DVR’s scheduling, recording, or video playback capabilities. Developers use the HME software developer kit (SDK) to create these applications. The SDK is released under the Common Public License (CPL).

This means that Yahoo (or anyone) could start releasing applications for the Tivo.

Wow, a bold and wise move.

January 27, 2005

Use a Modern Perl with Kwiki

To anyone else considering Kwiki (an excellent minimal yet extensible Perl Wiki), make sure you're running a recent Perl. I spent far too long attempting to set it up under Perl 5.6.x recently only to discover that it was utterly painless under Perl 5.8.x.

I wish I had thought to try that hours earlier.

That concludes this public service announcement.

January 14, 2005

Where will you store your data?

Over on SiliconBeat, I ran across their How big is Bloglines? story. Before I get into what I really wanted to say, I feel compelled to say that I think they (SiliconBeat) are doing a very good job of not only reporting what they learn, but also internalizing it. I really get a sense that they get what they're writing about. That doesn't always come across in journalist weblogs.

Keep it up, guys.

Now the interesting bit that jumped out at me is related to something I've been thinking about a lot lately. It was a quote they pulled from Paul Graham's book Hackers and Painters:

"The idea of 'your computer' is going away and being replaced by 'your data.' You should be able to get at your data from any computer. Or rather any client, and a client doesn't have to be your computer."

The more I find myself using increasingly larger and cheaper USB memory sticks, my colocated server, and on-line services like Flickr, I realize how my desktop and laptop computers are becoming less and less important in the grand scheme of things. And when I think about how popular web-based mail systems (Y! Mail, Hotmail, GMail) are, it's apparent that a lot of folks are keeping their data elsewhere.

So if we fast forward another year or three, where's most of your data going to live? On your hard disk? Your iPod? A 32GB USB stick? Off in "the cloud"?

What do you think?

January 02, 2005

Denial and Television 2.0: Quotes from The BitTorrent Effect

If you're at all interested in copyright, the movie industry, BitTorrent, or Bram Cohen (its creator), I recommend reading The BitTorrent Effect from the January 2005 issue of Wired.

Here are two excerpts that I enjoyed:

Cohen knows the havoc he has wrought. In November, he spoke at a Los Angeles awards show and conference organized by Billboard, the weekly paper of the music business. After hobnobbing with "content people" from the record and movie industries, he realized that "the content people have no clue. I mean, no clue. The cost of bandwidth is going down to nothing. And the size of hard drives is getting so big, and they're so cheap, that pretty soon you'll have every song you own on one hard drive. The content distribution industry is going to evaporate." Cohen said as much at the conference's panel discussion on file-sharing. The audience sat in a stunned silence, their mouths agape at Cohen's audacity.

That makes me really wonder about that so-called industry. How can they not see it changing before their eyes? Do they not know about their own kids downloading music and movies on-line? I guess they're just too locked in to their way of seeing the world. Like Ricky Fitts says in American Beauty, "Never underestimate the power of denial."

What exactly would a next-generation broadcaster look like? The VCs at Union Square Ventures don't know, though they'd love to invest in one. They suspect the network of the future will resemble Yahoo! or Amazon.com - an aggregator that finds shows, distributes them in P2P video torrents, and sells ads or subscriptions to its portal. The real value of the so-called BitTorrent broadcaster would be in highlighting the good stuff, much as the collaborative filtering of Amazon and TiVo helps people pick good material. Eric Garland, CEO of the P2P analysis firm BigChampagne, says, "the real work isn't acquisition. It's good, reliable filtering. We'll have more video than we'll know what to do with. A next-gen broadcaster will say, 'Look, there are 2,500 shows out there, but here are the few that you're really going to like.' We'll be willing to pay someone to hold back the tide."

Not enough people have been thinking about Television 2.0. Will it be the traditional broadcasters or the Internet companies who take the lead? My bet is on the Internet companies.

December 30, 2004

Firefox Downloads: 14 million and counting

Asa has posted a graph and write-up of the Firefox download numbers.

Not bad for a little Open Source project, is it?

December 28, 2004

Configuring Knoppix for Dad's Workstations

As noted earlier, I've been using Knoppix to get a pair of Linux boxes up and running for my father. Both sit on a private LAN (along with his notebook and a Deskjet color printer) via an old Netgear RP114 broadband router connected to the cable modem.

He uses a "Leave it to Beaver" theme for his machines. They are:

June

- ABit BP6 Motherboard with dual 500MHz Celeron (PII) CPUs

- 512MB PC100 RAM

- 80GB Disk

- Soundblaster Live

- 17" HP LCD Monitor

- Generic keyboard and mouse

Ward

- Pentium 4 1.8GHz CPU

- 512MB PC2700 RAM

- 2 250GB Disks in a Software RAID-1 configuration

- Integrated i810 audio

- 17" HP LCD Monitor

- Generic keyboard and mouse

The Plan

The goal was to configure both machines with a usable desktop so he could learn Linux. June will be the play system. If it gets messed up, no big deal. Ward will be the server that runs Samba and can store a bunch of the files that are also on his Thinkpad T40.

To make life easy, I turned to Knoppix 3.6 to get things started. It detected everything flawlessly, so I just had to partition each system (and configure the software RAID on Ward--that's a separate exercise) and then install Knoppix. I selected the "beginner" install, which gives you a multi-user Debian system while still retaining the fancy hardware auto-detection that Knoppix is famous for.

Once Knoppix was installed (2.6.7 kernel and all), the remaining task was to do a bit of post-installation customization and setup.

The Tweaking

I made a checklist that contained all of the things I needed to test, install, configure, or otherwise tweak. Here's the short version, partly for my records, and partly for the benefit of anyone else trying to do something similar.

Static IP addresses. I had to add a "nodhcp" parameter to the kernel at boot time. That involved editing /etc/lilo.conf and tacking that on to the end of the "append lines" for each kernel:

append="ramdisk_size=100000 init=/etc/init lang=us apm=power-off nomce nodhcp"

Then I ran /sbin/lilo to re-install the boot loader and rebooted to verify that it worked. Then I added a few lines to /etc/network/interfaces so that each machine got an address on his 192.168.2.0 network:

    auto lo eth1
    iface lo inet loopback
    iface eth1 inet static
        address 192.168.2.2
        netmask 255.255.255.0
        network 192.168.2.0
        gateway 192.168.2.1
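It's easy to get the address, netmask, and network lines out of step with each other. As a sanity check, here's a small sketch that computes the network address from an address and a netmask; the helper names are invented for this example and nothing here touches the real interface.

```shell
#!/bin/sh
# Sketch only: compute the network address for an IPv4 address and netmask.
# ip_to_int, int_to_ip, and network_of are illustrative helper names.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the dotted quad into four positional parameters
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

int_to_ip() {
    echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

network_of() {
    ip=$(ip_to_int "$1")
    mask=$(ip_to_int "$2")
    int_to_ip $(( ip & mask ))   # bitwise AND keeps only the network bits
}
```

Running `network_of 192.168.2.2 255.255.255.0` and comparing the result with the network line, then doing the same for the gateway, confirms that both sit on the same network.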

DNS. I edited /etc/resolv.conf so that it'd search the zawodny.com domain using his router as the first DNS server, and one of my colocated servers as the fallback:

    search zawodny.com
    nameserver 192.168.2.1
    nameserver 157.168.160.101

Update Packages. I next upgraded all the packages on the system. Luckily this is Debian, so it's easy with the magic of apt-get:

    apt-get update
    apt-get -dyu dist-upgrade    (marvel at the 500+ packages downloaded)
    apt-get dist-upgrade         (answer occasional questions)

Firefox and Thunderbird. I needed to make sure he had the latest and greatest in the Mozilla world. Again, apt-get to the rescue:

apt-get install mozilla-thunderbird mozilla-firefox

That even added 'em to the KDE menu. :-)

Printer Setup. Both machines need to be able to print documents on the HP Deskjet 862C. Luckily, I could just use the printer config tool in the KDE Control Center to do that. It's a nice little front end to CUPS that made the process as easy as it would be on Windows (or easier?).

Numlock. For reasons that elude me, he wants the Numlock key enabled by default. Though the BIOS has this set already, something in Linux undoes that during the boot process. But the KDE Control Center makes that trivial again. The Keyboard config tool lets you adjust that as well as the key repeat delay, rate, etc.

sudo. We both need root access without having to type the root password. I used the visudo command to add two lines to /etc/sudoers:

    jzawodn ALL=(ALL) ALL
    dzawodny ALL=(ALL) ALL

Clock Synchronization. The two systems didn't quite agree on time, so I manually set each using rdate and hwclock and then installed the ntp-simple package to handle keeping it in line:

    rdate -s woody.wcnet.org
    hwclock --systohc
    apt-get install ntp-simple

Of course, the ntp-simple package is pre-configured to talk to a reasonable set of outside NTP servers.

SSH. For remote access, I needed to have sshd start at boot time. The cable router was already configured to forward traffic to TCP port 22 from the outside to Ward. That simply required a few symlinks from /etc/rcX.d/S20 to /etc/init.d/ssh.
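Those symlinks can be made by hand or with a short loop. The following is a sketch under assumptions: link_ssh_start is a made-up function, the S20ssh link name and runlevels 2 through 5 are guesses at a typical SysV layout, and the optional prefix argument exists only so it can be tried in a scratch directory first.

```shell
#!/bin/sh
# Sketch only: create SysV-style start links for the ssh init script.
# link_ssh_start, the S20ssh link name, and runlevels 2-5 are assumptions.
link_ssh_start() {
    root=${1:-}    # optional prefix so this can be tried outside of /
    for level in 2 3 4 5; do
        mkdir -p "$root/etc/rc$level.d"
        # Relative link, so it resolves to <root>/etc/init.d/ssh
        ln -sf ../init.d/ssh "$root/etc/rc$level.d/S20ssh"
    done
}
```

On a Debian system, `update-rc.d ssh defaults` does much the same job and also adds the matching stop links, so that's probably the better route when it's available.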

Samba. The default /etc/samba/smb.conf file in Knoppix was nearly perfect for allowing home directories to be shared. I had to tweak two lines, changing a "no" to "yes" and vice-versa:

    browseable = yes
    read only = no

Then I used smbpasswd to generate passwords for both of us:

    smbpasswd dzawodny
    smbpasswd jzawodn

Finally, we used his XP notebook to visit \\ward.zawodny.com and \\june.zawodny.com to make sure he could map a drive to each.

Exim. I haven't done this yet, but I plan to get Exim4 running so that it can use the zawodny.com mail relay to send mail when necessary. It will use the existing TLS authentication which allows relaying for otherwise "unknown" hosts.

Ross on Family Tech Support

Ross provides his simple recommendations for the annual family tech support ritual otherwise known as the end of year holidays. In summary:

- Get 'em a Mac with OS X on it

- Get 'em broadband: it's fast and nearly always on

- Get 'em Firefox, 'cause IE is bad for your security

- Get 'em a good start page like My Yahoo or Google

- Get 'em on Web Mail like Yahoo Mail or GMail

- Get 'em on Flickr if they want to share photos

- Get 'em on Instant Messenger (I'd recommend AIM or Yahoo Messenger) and/or Skype

- Don't get 'em a blog

I mostly agree. I know that #1 is tricky and just won't fly in a lot of situations, but everything else applies nicely to a Windows box as well.

With that said, I'm off to set up my Dad's Linux machines. (Knoppix makes this pretty easy.)

See Also: How to fix Mom's computer.

December 26, 2004

Storage Costs Continue to Drop

After reading the /. story about IBM trying to build a 100TB tape drive, I found myself over on Pricewatch.com (which lamely uses frames and makes it hard to bookmark the page I want you to see). I like figuring out three things:

- Where's the current sweet spot in SATA drive prices? 250GB for $120

- How much does a Terabyte cost? $480 buys you four 250GB disks

- How much space can $1000 buy? 2TB and you'd have money left over to pay for shipping on the order
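The numbers in that list fall out of a few lines of integer arithmetic. This sketch uses only the $120-per-250GB price point from above and treats a terabyte as 1000GB; the variable names are just for illustration.

```shell
#!/bin/sh
# Sketch only: the storage arithmetic from the list above, in whole dollars.
drive_gb=250      # sweet-spot drive size
drive_cost=120    # price per drive, in dollars
budget=1000

drives_per_tb=$(( 1000 / drive_gb ))           # treating 1TB as 1000GB
cost_per_tb=$(( drives_per_tb * drive_cost ))
drives_in_budget=$(( budget / drive_cost ))
space_gb=$(( drives_in_budget * drive_gb ))
left_over=$(( budget - drives_in_budget * drive_cost ))

echo "1TB costs \$$cost_per_tb ($drives_per_tb drives)"
echo "\$$budget buys $drives_in_budget drives, or ${space_gb}GB, with \$$left_over left over"
```

Changing drive_gb and drive_cost to whatever the current sweet spot happens to be makes it easy to redo the comparison later.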

That's freakin' amazing!

I just wish laptop sized hard disks didn't lag so far behind. This 80GB disk seems downright tiny in comparison to what I could buy in a 3.5 inch form factor.

December 06, 2004

XML Weather Data Available

As Kevin notes, the release of NOAA Weather data in XML is a big deal. Not only will this make it easier for the smaller guys to start offering weather related services, it means that anyone with a few free hours and some scripting skills can build the applications that nobody else offered.

Having written the Geo::METAR perl module ages ago (I'm so behind on patches, it's not even funny), I really get why having this in XML rather than some ancient non-human friendly format is important. The METAR spec is a pain to deal with. I'm honestly surprised that the FAA still mandates that pilots learn to read METAR coded weather reports.

Like Kevin, I also wish there was a REST interface to the data too. The old METAR feeds had that much going for them.

December 05, 2004

Enigmail: Easy E-Mail Encryption via Thunderbird

The enigmatic Troutgirl points at the Enigmail project which, aside from having a great name, may make some headway in getting e-mail encryption technology in front of the masses.

Enigmail is an extension to the mail client of Mozilla / Netscape and Thunderbird which allows users to access the authentication and encryption features provided by the popular GnuPG software (see screenshots). Enigmail is open source and dually-licensed under the GNU General Public License and the Mozilla Public License.

Having tried e-mail encryption of many flavors over the years, this sounds like it might just work. Integration has always been the killer. Either the system used a standard encryption tool such as PGP or now GPG, or it used a built-in system that didn't necessarily play nicely with others.

Enigmail seems to solve both of those problems.

Excellent.

Now, if only I could find a GUI mailer that could happily coexist with mutt (and, more importantly, my client-side Maildir e-mail storage).

December 03, 2004

Weblog topics, blogger micro-brands, and weblog classification

John Roberts notes that:

One of the oddities for me in thinking about blogs is that there is rarely a sense of someone staying On topic in the sense of traditional publications. If you read the Wall Street Journal, you know what you're going to get, and where you're going to get it, often to the column on the page. If you read CNET News.com, ditto...

With blogs, you're reading about individuals, and most of us have -- and share -- varied interests. Some folks even blog about those different interests in the same place, with or without categorization. That diversity -- all in the same blog -- is part of the appeal for me, but it sure makes it hard to categorize different voices into coherent groupings.

This is something that a lot of people, especially those new to reading weblogs, just don't seem to get. They fail to see what's really happening. Many of us who write regularly are becoming our own micro-brands, just like magazine and newspaper columnists do. They build an audience that sometimes is loyal enough to follow them from publication to publication during their careers.

To the early adopters, among which I count myself, the names above often mean something, whether you know them as a person or not. Some of the labels stick. But the reason this still works is that the early adopters in the blogging world are still a small group, David Sifry's numbers be damned.

Well said.

I don't read Jon Udell's writing because he writes for Byte, Infoworld, or any other publication. I read his stuff because I like what he writes. He's a smart guy who writes interesting stuff. While his weblog is a lot more "professional" than mine or Tim Bray's (no offense, Tim--we both talk about non-work stuff regularly), the same reasoning applies.

Amusingly, he also uses me as an example:

But how do you describe why you would read, for instance, Jeremy Zawodny's blog?

I won't attempt to answer that, but it does give me an idea for a little reader survey. I do wonder, among other things, how many of my blog readers also read my columns in Linux Magazine.

His other point, made later on, is that blog classification is difficult. When the new My Yahoo launched, I told Scott Gatz (and several others internally) how I was amused by finding myself listed in the Living & Lifestyles category of our little content directory that helps users to find content.

I'm no Martha Stewart, but Wil Wheaton and Jeff Jarvis are in there too, so whatever. I guess that's where bloggers go. Notice that in the Internet & Technology category, it's exclusively Yahoo content on the first page.

Even the surfers at Yahoo aren't sure what to do with us! :-)

November 20, 2004

Taking my backups more seriously...

After my most recent brush with Murphy (in which I lost the hard disk on my T23), I decided it was time to actually do something with my new SATA hard disk controller (the Promise Sata150 TX4 I asked about) and those three 250GB disks I bought.

Linux Archive Server

I've added the controller and three disks to the Linux box that has served as my workstation from time to time. It already has two 80GB IDE disks, a DVD-ROM drive, and a CD-RW drive. So I had to visit Fry's to pick up some power cable splitters. Luckily the case has a beefy power supply.

Using a Knoppix 3.6 CD, I've booted with the 2.6.7 kernel so that I can talk to the controller--my 2.4 kernel had no driver. I used cfdisk to put a primary partition on each, set the type to FD (Linux software RAID auto-detect), and created an /etc/raidtab that looks like this:

raiddev /dev/md1
    raid-level              5
    nr-raid-disks           3
    nr-spare-disks          0
    persistent-superblock   1
    chunk-size              128
    device                  /dev/sda1
    raid-disk               0
    device                  /dev/sdb1
    raid-disk               1
    device                  /dev/sdc1
    raid-disk               2

Then I ran mkraid /dev/md1 to create the array. I built a ReiserFS filesystem and let the array sync while I ate dinner.

From there, I ran an rsync to copy the data on the existing disks to the new array. With that done, I will wipe the 80GB disks and create a RAID-1 array of them. It will house the operating system and home directories for the system. The larger RAID array will be mounted as /raid and used to archive copies of files from my other machines.

Then I'll automate the process of using rsync to keep local copies of all my remote data on the RAID array. Given the available space, I'll likely use rsync snapshots to maintain several versions of each machine that I remotely clone.
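
A rough sketch of what one of those snapshot runs might look like, assuming rsync's --link-dest option is used so that files unchanged since the previous snapshot are hard-linked rather than copied again. The host name, the paths, and the snapshot_command helper are all made up for illustration; the function only builds the command line and does not run anything.

```python
from datetime import date
from pathlib import PurePosixPath


def snapshot_command(source, snapshot_root, today, previous=None):
    """Build an rsync command line that writes a dated snapshot directory.

    If `previous` names an earlier snapshot, unchanged files are hard-linked
    against it via --link-dest, so each snapshot looks complete on disk but
    only costs the space of what actually changed.
    """
    root = PurePosixPath(snapshot_root)
    dest = root / today.isoformat()
    cmd = ["rsync", "-a"]
    if previous is not None:
        cmd.append(f"--link-dest={root / previous}")
    # Trailing slash on the source: copy the directory's contents,
    # not the directory itself.
    cmd += [source.rstrip("/") + "/", str(dest) + "/"]
    return cmd


# Hypothetical host and paths, for illustration only.
cmd = snapshot_command(
    "remotehost:/home",
    "/raid/snapshots/remotehost",
    today=date(2004, 11, 21),
    previous="2004-11-20",
)
print(" ".join(cmd))
```

A cron job could run the resulting command once per machine per night and prune the oldest snapshot directories when space gets tight.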

The end result is roughly 460GB of usable space for backups and archives of those backups. Two years from now, I'll probably be able to swap in new disks to get 2TB of space for the same cost. And the old disks will still be under warranty.
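
As a quick sanity check on that number: RAID-5 gives up one disk's worth of space to parity, so three disks yield two disks of usable capacity. The sketch below assumes the "250GB" on the box means decimal gigabytes (an assumption, though that is how disks are normally marketed) and shows the same capacity in binary units, which is what most tools report before filesystem overhead.

```python
def raid5_usable_bytes(disk_count, disk_bytes):
    """RAID-5 stores one disk's worth of parity, so usable space is (n - 1) disks."""
    if disk_count < 3:
        raise ValueError("RAID-5 needs at least three disks")
    return (disk_count - 1) * disk_bytes


# Assumption: a "250GB" disk as marketed, i.e. 250 * 10^9 bytes.
DISK_BYTES = 250 * 10**9

usable = raid5_usable_bytes(3, DISK_BYTES)
print(f"{usable / 10**9:.0f} GB (decimal)")
print(f"{usable / 2**30:.1f} GiB (binary)")
```

That works out to 500 decimal gigabytes, or about 465 in binary units, which is in the same ballpark as the figure above once the filesystem takes its share.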

Powerbook Backups

Inspired by Mark's posting on Super Duper, I finally found a solution that works well for my Powerbook. I've had an external Firewire disk: a Maxtor OneTouch 300GB model that I bought with the Powerbook. The disk came with Retrospect, but I've found the software to be rather lame.

Super Duper does exactly what I need. It provides an easy way to incrementally update a full backup of my laptop--one that I can even boot from and restore with if it dies entirely. In other circumstances, I can simply mount the backup volume and grab the files I need.

It's definitely worth the $20 registration fee and highly recommended.

The Thinkpad

After IBM replaces the disk in the Thinkpad, I'll probably sell the machine. They seem to be fetching prices in the $800 neighborhood. It's still under warranty, has 768MB of RAM, and a Wifi card with a great antenna.

That means I'll probably be running SeeYou and the software required to talk to my LX5000 flight computer on my company laptop (all praise the company!). I'll probably take two approaches to backing it up, aside from whatever the standard procedure at work is.

First, I'll use a Knoppix CD to simply copy the entire hard disk over to my archive server once in a while. Since that's a pretty brute force approach, I'll probably back up the most important data on a more regular basis. To do that, I'll probably revive my batch file scripting skills just long enough to set something up. It'll copy the important stuff over to the archive server either using Samba or WebDAV through Apache and mod_dav.

Any advice on this?

Laptop Stolen!

One thing I hadn't thought of is the possibility of my laptop being stolen. It recently happened to Rasmus.

My nice new T42p was stolen by some loser at a PHP conference in Paris. It is amazingly inconvenient to lose a laptop like this. It was from inside the conference hall and there was virtually no non-geek traffic there. If a fellow geek actually stole my laptop from a PHP conference then there is something seriously wrong with the world. You can steal my car, my money, my shoes, I don't really care, but don't steal my damn laptop!

Sigh.

I can't believe that someone at a PHP conference would steal the laptop of the guy who created PHP. Something is, indeed, wrong with this world.

It's more motivation to make sure my backups happen frequently and in a reliable manner.

Rasmus: if you need any advice on a Powerbook, I know you won't be shy about asking.

November 14, 2004

Why do I taunt Murphy?

I should have known better. Earlier today when I suggested that I had paid my dues to Murphy, I thought I was being smart.

Not so.

I sat down to write my monthly MySQL column for Linux Magazine, planning to bang out a few months' worth covering MySQL Administrator and the MySQL Query Browser. But then I discovered that there's no Mac version of either one!

They do offer Linux and Windows versions. But my Linux "desktop" is offline for maintenance (that's a polite way of saying I need to perform a disk swap and re-install). But I have a Windows laptop!

Except that my trusty IBM Thinkpad T23 has just decided that its hard disk is no longer interested in functioning without making loud *click* *click* *click* noises and refusing to let Windows complete the boot sequence.

The bad news:

- I know exactly what it means when hard disks begin that clicking routine: You're already screwed.

- I still have an article to write, so I either need a new topic or need to get a Linux box up ASAP.

- I have no backups.

- I spent the last several hours (has it been 3 hours?!) attempting to salvage the machine. I'm pretty convinced that's a lost cause at this point.

The good news:

- I only used it for running a single piece of software, so the lack of backups is not a major problem.

- The machine is probably still under warranty, so IBM will likely replace the hard disk at their expense.

- This may finally motivate me to sell that machine--once the new drive is installed with a fresh OS on it. I recently got a Windows notebook at work that's quite capable of running SeeYou.

Oh, well. I've learned my lesson.

Again.

Anyone got an idea for the MySQL column I need to write in the next 24 hours? :-(

November 09, 2004

Firefox 1.0 on Mac OS X: It's all good!

A while back I got a fair amount of traffic to my Firefox on Mac OS X Annoyances posting, in which I complained about the screwed up key bindings and other things.

The good news is that with the release of 1.0, that's been fixed! It minimizes just like it should. I'm finally able to switch from Safari to Firefox as my default browser on Mac OS X--just like I use on Linux, BSD, and Windows.

As I said in my Is Firefox for Search? post on the Yahoo Search blog, thanks to the worldwide Firefox developer community. This is a real milestone. We've come a long way since the great disappointment.

November 01, 2004

Ubiquity in the Internet Age

These are some half thought out ideas I've been considering recently...

Why is Microsoft the big and successful company it is today? One reason goes back to the original vision that Bill Gates was preaching back in the day. While I couldn't find an exact quote on-line, it was something like this:

A PC in every home and on every desktop running Microsoft software.

Given their nearly complete dominance of the market for PC operating systems and office productivity software, they've come shockingly close to achieving that vision--at least in some countries.

Yesterday's Lesson

Bill realized early on that there was great power (in many forms) to be had in getting on as many desktops as possible. The resulting near monopoly has allowed them to crush competition, make record profits, and enter other lines of business as a force to be reckoned with.

As the installed base of Microsoft software grew during the late 1980s and early 1990s, their ability to extract more money from it increased even more. Their growing power and abuse of it resulted in the famous anti-trust case brought by the United States Department of Justice.

The lesson was clear: To become ubiquitous was to become insanely profitable and powerful.

In Today's World

But we live in a different time. The PC is no longer the only battleground. The Internet is the new medium and it has the effect of leveling the playing field. While this isn't a new insight, let me say it in two specific ways:

- The web enables infinite distribution of content without any special effort or infrastructure.

- The web extends the reach of our apps and services as far as we're willing to let them go.

Both notions come back to ubiquity. If your stuff (and your brand) is everywhere, you win. The money will follow. It always does.

The closer to everywhere you can reach, the better off you'll be.

Where is everywhere?

The notion of everywhere has changed too. It's not just about every desktop anymore. It's about every Internet-enabled device: cell phone, desktop, laptop, tablet, palmtop, PDA, Tivo, set-top box, game console, and so on.

Everywhere also includes being on web sites you've never seen and in media that you may not yet understand.

What to do?

So how does a company take advantage of these properties? There are three pieces to the puzzle as I see it:

- do something useful really really well

- put the user in control by allowing access to your data and services in an easy and unrestricted way

- share the wealth

It sounds simple, doesn't it? Unfortunately, there are very few companies who get it. Doing so requires someone with real vision and the courage to make some very big leaps of faith. Those are rare in today's corporate leadership. Startups are more likely to have what it takes, partly because they have less to lose.

Let's briefly look at those three puzzle pieces in more concrete terms.

Kicking Ass and Taking Names

Without a killer product, you have no chance. Three companies that come to mind here are Amazon.com, Google, and eBay. Each has one primary thing they do exceptionally well--so well that many users associate the actions they represent with the companies themselves.

Need to buy a used thingy? Find it on eBay. Looking for some random bit of information? Google for it. Shopping for something? Check Amazon.com first.

The financial markets have rewarded these companies many times over for doing what they do very well. And users love them too.

Web Services and Syndication (RSS/Atom)

Giving users the ability to access your data and services on their own terms makes ubiquity possible. There are so many devices and platforms that it's really challenging to do a great job of supporting them all. There are so many web sites on which you have no presence today. By opening up your content and APIs, anyone with the right skills and tools can extend your reach.

Two good examples of this are Amazon.com and Flickr, the up and coming photo sharing community platform. Amazon.com provides web services that make it easy to access much of the data you see on their web site. With that data, it's possible to build new applications or re-use the content on your own web site. The end result is that Amazon sells more products. It also reinforces the idea of Amazon being the first place to look for product information.

Flickr provides RSS and Atom feeds for nearly every view of their site (per user, per tag, per group, etc.) and also has a simple set of APIs on which anyone can build tools for working with Flickr hosted photos. The result is that Flickr is becoming increasingly popular among early adopters and the Flickr team doesn't have to build tools for every platform or device in the world. (Of course, it helps that their service is head and shoulders above other photo sharing services like, say, Yahoo! Photos.)

Giving users the freedom to use data and services the way they want gives them a sense of ownership and freedom that few companies offer. It helps to build some of the most loyal, passionate, and vocal supporters. And some of them will put your data to work in ways you never dreamed of.
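
To give a sense of how low the barrier is once a feed exists, here is a minimal sketch of pulling titles and links out of an RSS 2.0 document using nothing but Python's standard library. The sample feed is invented for illustration and is not real Flickr or Amazon output; a real tool would fetch the feed over HTTP and cope with Atom as well.

```python
import xml.etree.ElementTree as ET

# A made-up RSS 2.0 document standing in for a real per-tag photo feed.
SAMPLE_FEED = """
<rss version="2.0">
  <channel>
    <title>Photos tagged "gliders"</title>
    <item>
      <title>On tow</title>
      <link>http://example.com/photos/1</link>
    </item>
    <item>
      <title>Final glide</title>
      <link>http://example.com/photos/2</link>
    </item>
  </channel>
</rss>
"""


def feed_items(rss_text):
    """Return (title, link) pairs for every <item> in an RSS 2.0 document."""
    root = ET.fromstring(rss_text.strip())
    return [
        (item.findtext("title", default=""), item.findtext("link", default=""))
        for item in root.iter("item")
    ]


for title, link in feed_items(SAMPLE_FEED):
    print(f"{title}: {link}")
```

A dozen lines like these are enough to republish someone else's content on your own site, which is exactly the kind of reach a company can't build by itself.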

If this stuff sounds familiar, maybe you picked up on the Web 2.0 vibe?

Affiliate Programs

The final ingredient is money. It's the ultimate motivator. If there's a way to let your users help you make more money (there probably is), you need to find it, do it, and give those users a cut of the action. Affiliate programs are one way of doing this, but not the only way.

Amazon.com has been doing this for a long time now. Their affiliate program provides an easy way to earn credit at Amazon in return for leads that result in sales. Affiliates advertise or promote products that Amazon sells and provide the referral link.

eBay provides cash if you refer bidders to their auctions. However, eBay's program hasn't resulted in the sort of huge adoption one might expect. I won't speculate on the cause of that here.

A relative newcomer, Google's AdSense program has provided thousands and thousands of small publishers with cash on a monthly basis in exchange for advertising space on their sites. Oh... and a bit of Google branding too.

Companies that do all Three

Let's briefly look at three companies that are exploiting all three of these ideas.

Amazon.com built the gold standard of on-line shopping. They followed up by providing easy to use web services that allow anyone to get at much of the data on Amazon's web site. This, coupled with their affiliate program, gives them very wide distribution and a good chance at capturing the long tail of users on the Internet.

eBay's auction platform is used by millions of people every year to buy and sell anything you can think of. Their incredibly large audience has served to cement their lead in this area over the last few years. Sellers have access to an API that makes listing their goods trivial. Hopefully they'll begin to offer RSS feeds or very simple web services aimed at making their listings more accessible to the other half: buyers or small publishers who'd like to refer them. That could greatly enhance their reach into the world of users who'd be willing to pimp a few eBay auctions if they can get a percentage of the sale.

Google followed the same model too. They began by setting the new standard for how web search should look, feel, and work. With that position solidified, they rolled out a web service to provide access to their search results. They also launched their wildly successful AdSense program. The fact that Google's ads are contextually relevant without any extra effort on the part of the publisher puts them in the lead position to monetize that long tail.

User Generated Content

I didn't list this separately as a necessary ingredient because it's really part of point #1, building a great service. However, it's worth calling it out here to reinforce its importance. As you look around the web to see which services you use over and over, it can be hard to truly appreciate the effects of user generated content.

Amazon? Sure, they have reviews and ratings of products. But look deeper. There are wish lists, the recommendation engine (it would be useless without data from others), list mania, and more.

Flickr? Users own the photos. But they also do the tagging, organize the photos, leave comments, form groups, and so on. Flickr provides the platform.

The more your service can be affected by user input, the more users are likely to come back again and get involved. This is personalization taken to the next level.

What's this all mean?

We're in the early days of all this, so there are still huge opportunities. Luckily a few companies have shown us the way--the new formula that works. But they each have room to improve.

Who will be next on the list?

Beats me. Your guess is as good as mine. But I'd like to see Yahoo on that short list by this time next year. Microsoft and AOL both have potential but I've seen little evidence from either. Apple is an interesting case. With iTunes, the iPod, and the iTunes Music Store, they've done #1 and #3 but really need to figure out how to open up their stuff. Netflix has done #1, part of #2, and none of #3 yet (that I've seen.)

In the blogging world, Google's Blogger has hit all three of the requirements. TypePad from SixApart has #1 and #2 nailed. I wonder what they'll do about #3. Maybe just AdSense integration?

In Summary

Go forth, build a great service, open it up, and share the money. The best services will win. And so will the users.

October 18, 2004

Wanted: Web Services Geek for Yahoo Search

Yahoo Search is looking for a Web Services Geek. Here's the official job pitch:

Come be a part of a team working to revolutionize the search experience for our users. We are looking for a highly motivated and experienced engineer who is passionate about Web Services, platforms, and enabling a new generation of search-based applications on the Internet. Y! Search has a wealth of content and services, and we want to make it accessible to more people, more devices, and more applications.

I won't bore you with the whole job listing. Instead, I'll summarize by saying this: Ideally, you'll have a good deal of Web Services experience and terms like REST, SOAP, RDF, and WSDL don't scare you. Of course, programming is a necessary part of the job too. Perhaps you've got experience in a compiled language (C/C++/Java) and a scripting language (Perl/PHP) in a Unix/Apache environment? That'd be perfect.

If this sounds interesting, please send me your resume in a non-Microsoft Word format. ASCII, PDF, and HTML work well. If you have a record of your experience with or interest in web services on your blog or a public mailing list, point me at that too.

This full-time position is on-site at Yahoo's headquarters in Sunnyvale, CA. As with many engineering jobs here, telecommuting happens but you're going to be in the office on a regular basis too.

Feel free to ask questions in the comments section. I'll answer what I can. Or e-mail me if you're not comfortable asking in public.

October 17, 2004

Greylisting Opinions and Options?

I'm considering a greylisting setup for WCNet.org to help slow the influx of spam that we have to run thru the backend spamd scanners. It's pretty bad these days. I've read a fair amount about the topic, but figured I'd ask here for any gotchas or horror stories.

As a point of reference, my implementation will probably be Exim 4.xx and greylistd.
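
For anyone who hasn't run into the idea, the core of greylisting fits in a few lines: the first time a (client IP, envelope sender, envelope recipient) triplet shows up, the server answers with a temporary failure, and the same triplet is only accepted once it retries after some minimum delay. The sketch below is a toy in-memory illustration of that rule. It is not how greylistd or Exim implement it, and the Greylist class and the ten-minute delay are assumptions made up for the example.

```python
import time


class Greylist:
    """Toy in-memory greylist keyed on (client IP, sender, recipient)."""

    def __init__(self, delay_seconds=600, clock=time.time):
        # Assumed retry window; real deployments tune this.
        self.delay_seconds = delay_seconds
        self.clock = clock
        self.first_seen = {}

    def check(self, client_ip, sender, recipient):
        """Return "defer" (answer with a temporary 4xx failure) or "accept"."""
        triplet = (client_ip, sender, recipient)
        now = self.clock()
        # Remember when this triplet was first seen; later calls keep that time.
        seen = self.first_seen.setdefault(triplet, now)
        if now - seen >= self.delay_seconds:
            return "accept"
        return "defer"


# Drive it with a fake clock so the example does not have to sleep.
fake_now = [0.0]
greylist = Greylist(delay_seconds=600, clock=lambda: fake_now[0])

first = greylist.check("192.0.2.1", "alice@example.com", "bob@example.org")
fake_now[0] = 900.0  # the sending server retries fifteen minutes later
retry = greylist.check("192.0.2.1", "alice@example.com", "bob@example.org")
print(first, retry)
```

A real setup also needs to expire old triplets and whitelist known-good senders, which is where most of the gotchas tend to live.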

On the hardware side, the main mail server is a dual-processor Sun 280R with 1GB RAM. Exim hands messages off to procmail which calls SpamAssassin via the spamc client. There are currently 4 backend boxes that host spamd processes.

On a slow day, we handle 150,000 messages. On busier days it's closer to 250,000.

I considered just using Spey, since a greylisting SMTP proxy would drop in very easily, but it doesn't seem to be very battle tested yet.

We're staying with Exim, so please don't suggest a non-Exim solution. Thanks for any input on this.